Technological Progress and Innovation in Early Twentieth-Century America

I’ve said a few times that the 1930s were the peak of technological innovation in the United States. I think any serious look at the history of science and technology will make this clear.

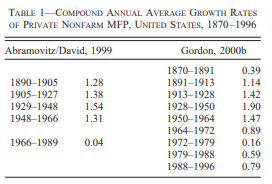

But you can also come at this from another angle. I always find it valuable to look for intersecting lines of evidence drawn from different approaches and bodies of knowledge. Alexander Field's paper "The Most Technologically Progressive Decade of the Century" offers one such independent approach, based on economic data.

Field uses multifactor productivity, also called total factor productivity (TFP), as a measure of innovation. TFP is the secret sauce that makes real economic growth possible beyond merely accumulating more workers and more capital.
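For readers who haven't met TFP before, here is a minimal sketch of the standard growth-accounting arithmetic behind it (the "Solow residual"). It assumes a Cobb-Douglas production function and a fixed capital share; the function name `tfp_growth` and the growth rates in the usage line are hypothetical illustrations, not Field's data and not necessarily his exact procedure.

```python
def tfp_growth(output_growth: float, capital_growth: float,
               labor_growth: float, capital_share: float) -> float:
    """Return TFP growth as the Solow residual.

    Assumes (hypothetically) a Cobb-Douglas production function
    Y = A * K**a * L**(1 - a), which in growth rates becomes
    g_Y = g_A + a*g_K + (1 - a)*g_L. TFP growth g_A is whatever
    output growth is left over after crediting capital and labor.
    """
    if not 0.0 <= capital_share <= 1.0:
        raise ValueError("capital_share must be between 0 and 1")
    labor_share = 1.0 - capital_share
    return output_growth - capital_share * capital_growth - labor_share * labor_growth


# Illustrative numbers only: 3% output growth, 2% capital growth,
# 1% labor growth, and a capital share of 0.3.
residual = tfp_growth(0.03, 0.02, 0.01, 0.3)
print(f"{residual:.3f}")  # prints 0.017
```

In this made-up example, more than half of the 3% output growth comes from the residual rather than from adding inputs, which is the sense in which TFP tracks "innovation."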

Field gets into the details of all of this in the paper, and I encourage you to read it. I gather that many economists don’t like TFP on a conceptual level, but I find their arguments as unpersuasive as biostatisticians who complain that Number Needed to Treat doesn’t have good statistical properties, but who completely ignore what the numbers say about the way we practice medicine. Data with “bad” properties can still tell you something!

By the economic numbers, current innovation is wholly underwhelming, but stating this forthrightly usually invites derision. For example:

Paul Krugman wrote in 1998, “The growth of the Internet will slow drastically, as the flaw in ‘Metcalfe’s law’—which states that the number of potential connections in a network is proportional to the square of the number of participants—becomes apparent: most people have nothing to say to each other! By 2005 or so, it will become clear that the Internet’s impact on the economy has been no greater than the fax machine’s.”

You can readily find people heaping abuse on Krugman for saying this, but he was right! The innovative impact of the Internet has not panned out at all. That this is true isn't really in doubt; there are lots of arguments about why, but not about whether.
