Welcome to BenEspen.com

I host both my personal blog, With Both Hands, and the work of the late John J. Reilly, The Long View.

After John’s untimely death in 2012, I copied his website, and I have been re-posting his blogs and essays as part of The Long View Re-posting Project. My aim is not only to preserve his works and his memory, but to extend them. I try to add a quantitative dimension to John’s depth in cyclical theories of history and esoteric movements. There wasn’t anyone else with quite his particular set of interests. The single most popular page on my website is John’s tongue-in-cheek self-published book Spengler’s Future, which used a very simple program in BASIC to predict the next seven centuries.

My own blog is primarily about my hobbies, rather than my work in medical devices, pharmaceuticals, and biotechnology, which is largely trade secret and company confidential. However, my career as an engineer, inventor, and innovator definitely colors what I write, as in my most popular essay, What Works in Healthcare?

I accept requests to review books in dead-tree, ebook, and audiobook formats. You can contact me by emailing ben@ this domain. My book reviews tend to favor the esoteric reading, which means that for works of fiction they are light on characterization and plot structure, but I’ll have a lot to say about millenarian cults, very applied psychology, and obscure historical analogies. I do non-fiction as well, but the same caveat applies.

Latest

The Long View 2009-04-24: Witch Hunts & Purges

Trap Line by Timothy Zahn

WBH Digest 2025-04-25: The Campaign Continues

The Uses of Convention

WBH Digest 2025-02-14: Saint Valentine's Day

WBH Digest 2025-01-17: Agony and Ecstasy

The Long View

The Long View 2009-04-24: Witch Hunts & Purges

The Long View 2009-04-17: Spengler at the Zombies' Tea Party

The Long View 2009-04-10

WBH Digest 2024-11-17: The Perennial Apocalypse

The Long View: American Babylon

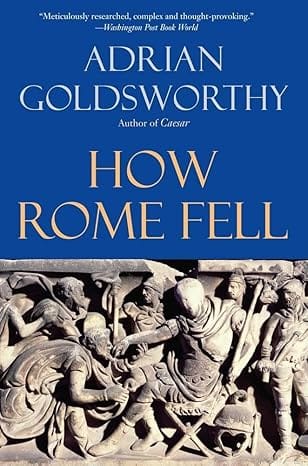

The Long View: How Rome Fell